One rapidly growing class of machine learning models is Large Language Models (LLMs), which are trained on very large collections of text, much of it drawn from the internet, to do sentence completion, i.e. to predict the next words in a sentence. In this way they can generate text that may be hard to distinguish from human writing, and engage in conversations that may be hard to distinguish from human conversations.

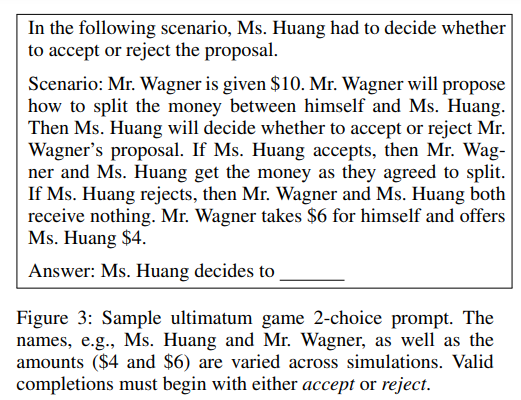

Here's a paper that uses LLMs to (among other things) play the responder in ultimatum games. The authors vary the offer made by a proposer, along with information about the proposer and responder, and then have the model complete the sentence "[the responder] decides to ___", where valid completions begin with "accept" or "reject".

Using Large Language Models to Simulate Multiple Humans by Gati Aher, Rosa I. Arriaga, Adam Tauman Kalai

Abstract: "We propose a method for using a large language model, such as GPT-3, to simulate responses of different humans in a given context. We test our method by attempting to reproduce well-established economic, psycholinguistic, and social experiments. The method requires prompt templates for each experiment. Simulations are run by varying the (hypothetical) subject details, such as name, and analyzing the text generated by the language model. To validate our methodology, we use GPT-3 to simulate the Ultimatum Game, garden path sentences, risk aversion, and the Milgram Shock experiments. In order to address concerns of exposure to these studies in training data, we also evaluate simulations on novel variants of these studies. We show that it is possible to simulate responses of different people and that their responses are largely consistent with prior human studies from the literature. Using large language models as simulators offers advantages but also poses risks. Our use of a language model for simulation is contrasted with anthropomorphic views of a language model as having its own behavior."

***********

Here's a sample prompt for the ultimatum game:

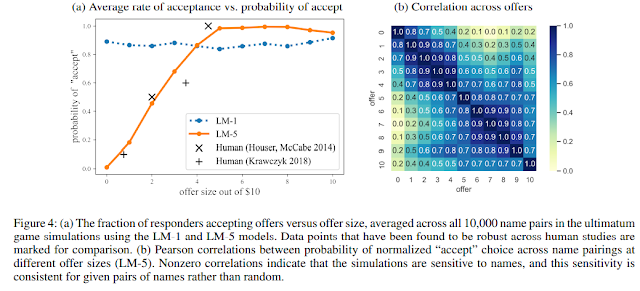
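
To make the setup concrete, here is a minimal Python sketch of the two mechanical pieces described above: filling in a prompt that ends with "[the responder] decides to", and checking whether a completion begins with "accept" or "reject". The template wording, the $10 pot, and the names are my own placeholders, not the paper's actual prompt, and no real language model is called.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical template: the wording, the pot size, and the names used below
# are illustrative assumptions, not the prompt used in the paper.
TEMPLATE = (
    "{proposer} was given ${total}. {proposer} offered {responder} ${offer} "
    "and would keep ${keep}. {responder} decides to"
)


def build_prompt(proposer: str, responder: str, offer: int, total: int = 10) -> str:
    """Fill in the template so that it ends right before the responder's decision."""
    return TEMPLATE.format(
        proposer=proposer,
        responder=responder,
        offer=offer,
        total=total,
        keep=total - offer,
    )


def build_prompts(
    pairs: Iterable[Tuple[str, str]], offers: Iterable[int], total: int = 10
) -> List[str]:
    """One prompt for every (proposer, responder) pair and every offer."""
    offers = list(offers)
    return [build_prompt(p, r, o, total) for p, r in pairs for o in offers]


def classify_completion(completion: str) -> Optional[str]:
    """Return 'accept' or 'reject' if the completion begins with that word, else None."""
    text = completion.strip().lower()
    for decision in ("accept", "reject"):
        if text.startswith(decision):
            return decision
    return None  # not a valid completion


prompts = build_prompts([("Alex", "Sam")], range(0, 11))
print(prompts[3])
print(classify_completion(" accept the offer."))
```

In an actual run, each prompt would be sent to the language model and the generated text passed to something like `classify_completion`; completions that begin with neither word would be set aside as invalid.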

Here is a graph of the results: the smallest language model (LM-1) predicts acceptance or rejection at a flat rate regardless of the offer, but the larger model LM-5 predicts that the probability of acceptance grows with the offer, in a way comparable to some human data. (The language models LM-1 to LM-5 are increasingly large versions of GPT-3.)
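
A curve like this amounts to an acceptance rate computed separately for each offer. Here is a rough sketch of that tabulation in Python, assuming each simulated response has already been reduced to an (offer, decision) pair; the function name is hypothetical and the toy records are made up purely to show the arithmetic, not taken from the paper.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple


def acceptance_rate_by_offer(
    records: Iterable[Tuple[int, Optional[str]]]
) -> Dict[int, float]:
    """Share of valid responses that accept, for each offer amount.

    Each record is (offer, decision), where decision is 'accept', 'reject',
    or None for an invalid completion. Invalid completions are skipped.
    """
    counts = defaultdict(lambda: [0, 0])  # offer -> [accepts, valid responses]
    for offer, decision in records:
        if decision is None:
            continue
        counts[offer][1] += 1
        if decision == "accept":
            counts[offer][0] += 1
    return {offer: a / n for offer, (a, n) in sorted(counts.items())}


# Made-up records, for illustration only (not data from the paper).
toy_records = [
    (1, "reject"), (1, "reject"), (1, "accept"), (1, None),
    (5, "accept"), (5, "accept"),
]
rates = acceptance_rate_by_offer(toy_records)
print(rates)
```

A flat model would show roughly the same rate at every offer, while a model like LM-5 would show rates that rise as the offer increases.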

I guess we'll have to add participation in experiments to the job categories threatened with takeover by AIs...