Monday, October 3, 2022

Choosing (as if) from a menu (by Gonczarowski, Heffetz and Thomas; and by Bó, and Hakimov)

Thursday, September 29, 2022

What is needed to gain support for effective algorithms in hiring, etc?

Here's an experiment motivated in part by European regulations on transparency of algorithms.

Aversion to Hiring Algorithms: Transparency, Gender Profiling, and Self-Confidence by Marie-Pierre Dargnies, Rustamdjan Hakimov and Dorothea Kübler

Abstract: "We run an online experiment to study the origins of algorithm aversion. Participants are either in the role of workers or of managers. Workers perform three real-effort tasks: task 1, task 2, and the job task which is a combination of tasks 1 and 2. They choose whether the hiring decision between themselves and another worker is made either by a participant in the role of a manager or by an algorithm. In a second set of experiments, managers choose whether they want to delegate their hiring decisions to the algorithm. In the baseline treatments, we observe that workers choose the manager more often than the algorithm, and managers also prefer to make the hiring decisions themselves rather than delegate them to the algorithm. When the algorithm does not use workers’ gender to predict their job task performance and workers know this, they choose the algorithm more often. Providing details on how the algorithm works does not increase the preference for the algorithm, neither for workers nor for managers. Providing feedback to managers about their performance in hiring the best workers increases their preference for the algorithm, as managers are, on average, overconfident."

"Our experiments are motivated by the recent debates in the EU over the legal requirements for algorithmic decisions. Paragraph 71 of the preamble to the General Data Protection Regulation (GDPR) requires data controllers to prevent discriminatory effects of algorithms processing sensitive personal data. Articles 13 and 14 of the GDPR state that, when profiling takes place, people have the right to “meaningful information about the logic involved” (Goodman and Flaxman 2017). While the GDPR led to some expected effects, e.g., privacy-oriented consumers opting out of the use of cookies (Aridor et al. 2020), the discussion over the transparency requirements and the constraints on profiling is still ongoing. Recently, the European Parliament came up with the Digital Services Act (DSA), which proposes further increasing the requirements for algorithm disclosure and which explicitly requires providing a profiling-free option to users, together with a complete ban on the profiling of minors. Our first treatment that focuses on the workers aims at identifying whether making the algorithm gender-blind and therefore unable to use gender to discriminate, as advised in the preamble of the GDPR and further strengthened in the proposed DSA, increases its acceptance by the workers. The second treatment is a direct test of the importance of the transparency of the algorithm for the workers. When the algorithm is made transparent in our setup, it becomes evident which gender is favored. This can impact algorithm aversion differently for women and men, for example if workers’ preferences are mainly driven by payoff maximization.

"The treatments focusing on the managers’ preferences aim at understanding why some firms are more reluctant than others to make use of hiring algorithms. One possible explanation for not adopting such algorithms is managerial overconfidence. Overconfidence is a common bias, and its effect on several economic behaviors has been demonstrated (Camerer et al. 1999, Dunning et al. 2004, Malmendier and Tate 2005, Dargnies et al. 2019). In our context, overconfidence is likely to induce managers to delegate the hiring decisions to the algorithm too seldom. Managers who believe they make better hiring decisions than they actually do, may prefer to make the hiring decisions themselves. Our paper will provide insights about the effect of overconfidence on the delegation of hiring decisions to algorithms. Similar to the treatments about the preferences of workers, we are also interested in the effect of the transparency of the algorithm on the managers’ willingness to delegate the hiring decisions. Disclosing the details of the algorithm can increase the managers’ trust in the algorithm."

Wednesday, December 1, 2021

School choice using deferred acceptance algorithms increases competition for selective schools, by Terrier, Pathak and Ren

Here's a working paper from the LSE which concludes that making it safe for parents to truthfully report their preferences increases the competition for selective schools (called grammar schools, which prioritize students based on admission tests), with the unintended consequence of disadvantaging poorer families in England. The paper contains a good description of past and present school assignment regimes in England.

From immediate acceptance to deferred acceptance: effects on school admissions and achievement in England by Camille Terrier Parag A. Pathak and Kevin Ren, Centre for Economic Performance Discussion Paper No.1815, November 2021

"Abstract: Countries and cities around the world increasingly rely on centralized systems to assign students to schools. Two algorithms, deferred acceptance (DA) and immediate acceptance (IA), are widespread. The latter is often criticized for harming disadvantaged families who fail to get access to popular schools. This paper investigates the effect of the national ban of the IA mechanism in England in 2008. Before the ban, 49 English local authorities used DA and 16 used IA. All IA local authorities switched to DA afterwards, giving rise to a cross-market difference-in-differences research design. Our results show that the elimination of IA reduces measures of school quality for low-SES students more than high-SES students. After the ban, low-SES students attend schools with lower value-added and more disadvantaged and low-achieving peers. This effect is primarily driven by a decrease in low-SES admissions at selective schools. Our findings point to an unintended consequence of the IA to DA transition: by encouraging high-SES parents to report their preferences truthfully, DA increases competition for top schools, which crowds out low-SES students."

And here are the paper's concluding sentences:

" In England, selective schools pick students based on test scores, which favors high-SES parents. After the transition to DA, high-SES parents enroll at these schools at higher rates. Selective admissions are widespread throughout education, so our results provide an important caution to equity rationales for DA over IA in settings where selective schools have large market share."

Wednesday, September 15, 2021

School choice in Latin America, in BBC News Mundo

A news story with an unusually detailed account of school choice algorithms discusses some of the issues in Latin America, in Spanish, in BBC News Mundo. (Google translate does an adequate job...). One of the issues discussed is that geographic priorities for schools give wealthier families an advantage, and perpetuate geographic segregation.

Qué es el Mecanismo de Boston y por qué expertos denuncian que hay segregación en las asignaciones de escuelas en América Latina Analía Llorente BBC News Mundo

[G translate: What is the Boston Mechanism and why experts denounce that there is segregation in school assignments in Latin America]

Some snippets:

"The Boston mechanism allows for a lot of parenting strategy and that means that it generates a lot of inequalities " [says] Paula Jaramillo, Associate Professor in Economics at the Universidad de los Andes in Colombia

...

"The criticism is against the Boston Mechanism because it continues to be applied, but it is also against the deferred acceptance method because it is generating segregation at the neighborhood level," says Caterina Calsamiglia, a leader in the investigation of these methods.

"The specialist refers to the fact that a student obtains more points for his proximity to the preferred school, therefore he has a greater chance of being admitted to it.

"This creates one of the main strategies is the moving neighborhood, decision can only carry out families with middle income to high, creating inequality."

...

"In many places in Latin America the selection method is not even regulated, and where it is, they are not very transparent with parents in communicating the methods.

"We know a lot about what is done by cities in the United States, in Europe, in Asia, we don't know so much about Latin America," says Paula Jaramillo.

...

"In conclusion, the experts believe that there is no magic method that can be applied uniformly in the countries of the region to avoid segregation and inequality in school selection.

"They agree that the deferred acceptance method is the "fairest" but not perfect. There are many factors to take into account from the quality of the schools to their location."

Sunday, August 15, 2021

Fair algorithms for selecting citizen assemblies, in Nature

Here's a paper that grapples with the problem that not every group in society is equally likely to accept an appointment for which they have been selected, which complicates the problem of selecting representative committees while also giving each potential member approximately the same chance of being selected.

Fair algorithms for selecting citizens’ assemblies. by Bailey Flanigan, Paul Gölz, Anupam Gupta, Brett Hennig & Ariel D. Procaccia, Nature (2021). https://doi.org/10.1038/s41586-021-03788-6

Abstract: Globally, there has been a recent surge in ‘citizens’ assemblies’1, which are a form of civic participation in which a panel of randomly selected constituents contributes to questions of policy. The random process for selecting this panel should satisfy two properties. First, it must produce a panel that is representative of the population. Second, in the spirit of democratic equality, individuals would ideally be selected to serve on this panel with equal probability2,3. However, in practice these desiderata are in tension owing to differential participation rates across subpopulations4,5. Here we apply ideas from fair division to develop selection algorithms that satisfy the two desiderata simultaneously to the greatest possible extent: our selection algorithms choose representative panels while selecting individuals with probabilities as close to equal as mathematically possible, for many metrics of ‘closeness to equality’. Our implementation of one such algorithm has already been used to select more than 40 citizens’ assemblies around the world. As we demonstrate using data from ten citizens’ assemblies, adopting our algorithm over a benchmark representing the previous state of the art leads to substantially fairer selection probabilities. By contributing a fairer, more principled and deployable algorithm, our work puts the practice of sortition on firmer foundations. Moreover, our work establishes citizens’ assemblies as a domain in which insights from the field of fair division can lead to high-impact applications.

...

"To our knowledge, all of the selection algorithms previously used in practice (Supplementary Information section 12) aim to satisfy one particular property, known as ‘descriptive representation’ (that the panel should reflect the composition of the population)16. Unfortunately, the pool from which the panel is chosen tends to be far from representative. Specifically, the pool tends to overrepresent groups with members who are on average more likely to accept an invitation to participate, such as the group ‘college graduates’.

...

"Selection algorithms that pre-date this work focused only on satisfying quotas, leaving unaddressed a second property that is also central to sortition: that all individuals should have an equal chance of being chosen for the panel.

...

"Although it is generally impossible to achieve perfectly equal probabilities, the reasons to strive for equality also motivate a more gradual version of this goal: making probabilities as equal as possible, subject to the quotas. We refer to this goal as ‘maximal fairness’.

...

"the algorithms in our framework (1) explicitly compute a maximally fair output distribution and then (2) sample from that distribution to select the final panel (Fig. 1). Crucially, the maximal fairness of the output distribution found in the first step makes our algorithms optimal. To see why, note that the behaviour of any selection algorithm on a given instance is described by some output distribution; thus, as our algorithm finds the fairest possible output distribution, it is always at least as fair as any other algorithm."

Friday, August 13, 2021

Generalizing deferred acceptance in many to one matching with contracts, by Hatfield, Kominers and Westkamp in RESTUD

Stability, Strategy-Proofness, and Cumulative Offer Mechanisms, by John William Hatfield, Scott Duke Kominers, Alexander Westkamp, The Review of Economic Studies, Volume 88, Issue 3, May 2021, Pages 1457–1502, https://doi.org/10.1093/restud/rdaa052

Abstract: We characterize when a stable and strategy-proof mechanism is guaranteed to exist in the setting of many-to-one matching with contracts. We introduce three novel conditions—observable substitutability, observable size monotonicity, and non-manipulability via contractual terms—and show that when these conditions are satisfied, the cumulative offer mechanism is the unique mechanism that is stable and strategy-proof (for workers). Moreover, we show that our three conditions are, in a sense, necessary: if the choice function of some firm fails any of our three conditions, we can construct unit-demand choice functions for the other firms such that no stable and strategy-proof mechanism exists. Thus, our results provide a rationale for the ubiquity of cumulative offer mechanisms in practice.

Tuesday, February 18, 2020

Market design and algorithmic criminal justice--by Jung, Kannan, Lee, Pai, Roth and Vohra

Fair Prediction with Endogenous Behavior

Christopher Jung, Sampath Kannan, Changhwa Lee, Mallesh M. Pai, Aaron Roth,and Rakesh Vohra

February 17, 2020

Abstract: There is increasing regulatory interest in whether machine learning algorithms deployed in consequential domains (e.g. in criminal justice) treat different demographic groups “fairly.” However, there are several proposed notions of fairness, typically mutually incompatible. Using criminal justice as an example, we study a model in which society chooses an incarceration rule. Agents of different demographic groups differ in their outside options (e.g. opportunity for legal employment) and decide whether to commit crimes. We show that equalizing type I and type II errors across groups is consistent with the goal of minimizing the overall crime rate; other popular notions of fairness are not.

*********

And here's a blog post about the paper by one of the authors:

Fair Prediction with Endogenous Behavior

Can Game Theory Help Us Choose Among Fairness Constraints?

"...The crime-minimizing solution is the one that sets different thresholds on posterior probabilities (i.e. uniform thresholds on signals) so as to equalize false positive rates and false negative rates. In other words, to minimize crime, society should explicitly commit to not conditioning on group membership, even when group membership is statistically informative for the goal of predicting crime.

"Why? Its because although using demographic information is statistically informative for the goal of predicting crime when base rates differ, it is not something that is under the control of individuals --- they can control their own choices, but not what group they were born into. And making decisions about individuals using information that is not under their control has the effect of distorting their dis-incentive to commit crime --- it ends up providing less of a dis-incentive to individuals from the higher crime group (since they are more likely to be wrongly incarcerated even if they don't commit a crime). And because in our model people are rational actors, minimizing crime is all about managing incentives. "

Tuesday, September 24, 2019

Algorithms and intelligence at Penn

The human driver

As the ability to harness the power of artificial intelligence grows, so does the need to consider the difficult decisions and trade-offs humans make about privacy, bias, ethics, and safety.

"Already, some AI-enabled practices have raised serious concerns, like the ability to create deepfake videos to put words in someone’s mouth, or the growing use of facial recognition technology in public places. Automated results that turned out to reflect racial or gender bias has prompted some to say the programs themselves are racist.

"But the problem is more accidental than malicious, says Penn computer scientist Aaron Roth. An algorithm is a tool, like a hammer—but while it would make no sense to talk about an “ethical” hammer, it’s possible to make an algorithm better through more thoughtful design.

“It wouldn’t be a moral failure of the hammer if I used it to hit someone. The ethical lapse would be my own,” he says. “But the harms that algorithms ultimately do are several degrees removed from the human beings, the engineers, who are designing them.”

"Roth and other experts acknowledge it’s a huge challenge to push humans to train the machines to emphasize fairness, privacy, and safety. Already, experts across disciplines, from engineering and computer science to philosophy and sociology, are working to translate vague social norms about fairness, privacy, and more into practical instructions for the computer programs. That means asking some hard questions, Roth says.

“Of course, regulation and legal approaches have an important role to play, but I think that by themselves they are woefully insufficient,” says Roth, whose book, “The Ethical Algorithm,” with Penn colleague Michael Kearns will be published in November.

The sheer size of the data sets can make transparency difficult, he adds, while at the same time revealing errors more easily."

*************

Listen also to

The programming ethos

In a podcast conversation, Penn professors Michael Kearns, Aaron Roth, and Lisa Miracchi discuss the ethics of artificial intelligence.

Tuesday, September 3, 2019

Efficiency and Stability in Large Matching Markets, by Che and Tercieux in the JPE

"This “staged” version of DA resembles standard DA except for one crucial difference: the mechanism periodically terminates a stage and finalizes the tentative assignment up to that point. The event triggering the termination of a stage is an agent reaching a threshold number of offers. Intuitively, the mechanism activates a “circuit breaker” whenever the competition “overheats” to such an extent that an agent finds himself at the risk of losing an object he ranks highly to an agent who ranks it relatively lowly (more precisely, above the threshold rank). This feature ensures that each object assigned at each stage goes to an agent who ranks it relatively highly among the objects available at that stage."

Wednesday, August 28, 2019

Matching in Google's internal labor market

Abstract: "This case describes how Google designed and launched an internal matching market to assign individual workers with projects and managers. The case evaluates how marketplace design considerations—and several alternative staffing models—could affect the company’s goals and workers’ well-being. It discusses the details of implementation as well as the intended (and unintended) consequences of the internal match system. The case concludes with a debate about how the Chameleon marketplace could expand to include more Googlers and illustrates what to consider when thinking about launching new matching markets in organizations."

"Kuehn and her team launched the Chameleon program at the end of 2015 to optimize employees’ careers and Google’s business needs. Unlike most internal staffing paradigms, Chameleon did not rely on a central HR coordinator to assign the unit’s hundreds of individual contributors (ICs) to roles in its dozens of teams. Nor did Chameleon rely on self-initiated transfers, nor ad hoc, centrally planned reorganizations.

"Instead, under Chameleon, a staffing marketplace would open up three times during the year. At the start of each round, ICs would use Chameleon’s online platform to submit private rankings of the available roles. In turn, the ICs would be ranked by the manager responsible for each open role. The Chameleon platform would then turn these rankings into matches using a simple but robust marketplace algorithm, assigning ICs to roles for the next 6–18 months."

*******

Not a spoiler: It's a deferred acceptance algorithm...

A big role is played by a pseudonymous Googler who the case calls Bradford Preston, who was familiar with the market design literature and who "moved to a part-time role so that he could begin a PhD in economics."

There's much more, about getting this kind of internal marketplace adopted. And apparently new Googlers are called Nooglers.

Tuesday, July 30, 2019

Speed bumps for high frequency trading

More Exchanges Add ‘Speed Bumps,’ Defying High-Frequency Traders

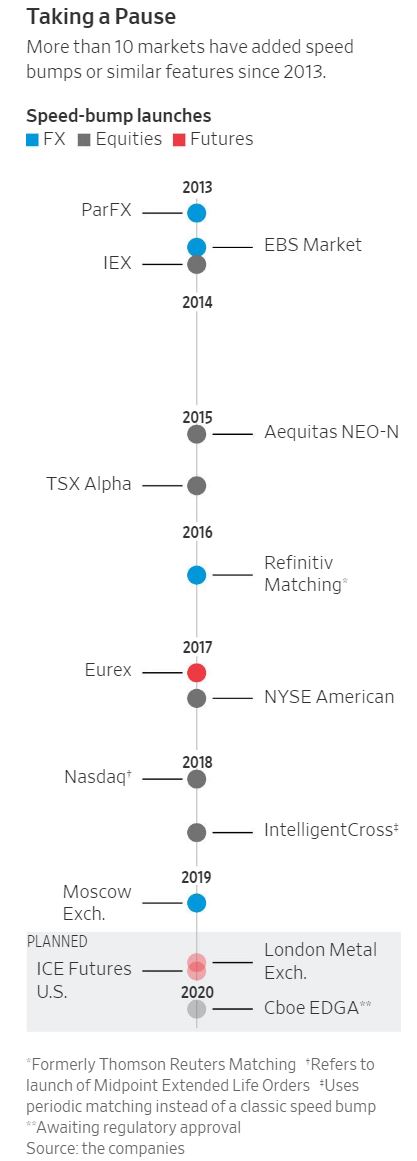

Over a dozen financial markets plan mechanisms that impose a split-second delay before executing trades by By Alexander Osipovich.

"By 2020, more than a dozen markets in stocks, futures and currencies from Toronto to New York to Moscow will slow down trading via speed bumps or similar features, if all the current planned launches are carried out. Five years ago, only a few markets had speed bumps.

...

"Among the exchanges set to debut their first speed bumps are the London Metal Exchange, which plans to add an eight-millisecond delay to gold and silver futures later this year. Chicago-based Cboe Global Markets hopes to add a speed bump on its EDGA stock exchange in 2020, if it wins regulatory approval.

"LME, Cboe and other markets adopting speed bumps say they want to neutralize “latency arbitrage,” a strategy in which a fast trader takes advantage of a moving price before other players can react.

...

"Cboe’s proposal would force the HFT firm to wait four milliseconds before buying the Ford shares. But the same delay wouldn’t apply if the investor sent Cboe an electronic message canceling his or her $10.00 sell order. That gives the investor a brief window of time to avoid being picked off by the faster trade.

"Most of the latest speed-bump plans have a similar, “asymmetrical” design, meaning they don’t apply equally to all trades. Such speed bumps are typically meant to favor players who publicly quote prices on an exchange, rather than those who attempt to buy or sell using those prices."

Thursday, April 25, 2019

Should NYC school choice diversify school assignments to match applicant demographics?

Here's a report from FastCompany:

How to fix segregation in NYC schools? Let students hack the algorithm

A Nobel Prize winner’s algorithm helps decide which students are placed in which New York schools. A team of students is trying to upgrade it.

"Many of the most desirable, highest-performing schools have a gross disparity between the racial breakdown of who applies versus who eventually attends those schools.

"Data acquired from the Department of Education by IntegrateNYC through a freedom of information request and provided to Fast Company bleakly demonstrates this point. For instance, while white students accounted for one quarter of students who applied in 2017 to Beacon High School, 42% of that Fall’s freshman class was white. At Eleanor Roosevelt High School, 16% of applicants that year were black, yet less than 1% of admitted students were black.

"Part of the problem is that the education children receive from kindergarten to eighth grade is not equal. Students who live in more affluent, largely white neighborhoods have better middle schools, which better prepare students for high school entrance exams. Students from wealthier families are also more likely to be able to afford private test prep for their students. But the city’s current admissions process does nothing to correct this.

...

"The solution students came up with was to create a new matchmaking algorithm that prioritizes factors highly correlated with race such as a student’s census tract, whether they receive free or reduced-price lunch, and whether English is their second language. Such an algorithm would boost disadvantaged students higher up in the matchmaking process, provided they have already passed a school’s screening process."

***********

In NYC, school principals have a lot of agency in determining the input of the school matching algorithm, in the form of preference lists for their schools. The city (i.e. the NYCDOE) provides guidelines for schools. So another approach to achieving more and different diversity would be to provide different guidelines and requirements for schools, that would change the inputs to the matching algorithm (the schools' rank order lists of students), rather than trying to modify the algorithm. My guess is that this would be a more effective, nuanced, and flexible approach.

Friday, March 8, 2019

Why is it hard for securities exchanges to restore price competition (instead of speed competition)?

Will the Market Fix the Market?A Theory of Stock Exchange Competition and Innovation

Eric Budish, Robin S. Lee and John J. Shim

February 27, 2019

Abstract As of early 2019, there are 13 stock exchanges in the U.S., across which over 1 trillion shares ($50 trillion) are traded annually. All 13 exchanges use the continuous limit order book market design, a design that gives rise to latency arbitrage—arbitrage rents from symmetrically observed public information—and the associated high-frequency trading arms race (Budish, Cramton and Shim, 2015). Will the market adopt new market designs that address the negative aspects of high-frequency trading? This paper builds a theoretical model of stock exchange competition to answer this question. Our model, shaped by institutional details of the U.S. equities market, shows that under the status quo market design: (i) trading behavior across the many distinct exchanges is as if there is just a single “synthesized” exchange; (ii) competition among exchanges is fierce on the dimension of traditional trading fees; but (iii) exchanges capture and maintain significant economic rents from the sale of speed technology—arms for the arms race. Using a variety of data, we document seven stylized empirical facts that align with these predictions. We then use the model to examine the private and social incentives for market design innovation. We show that the market design adoption game among exchanges is a repeated prisoner’s dilemma. If an exchange adopts a new market design that eliminates latency arbitrage, it would win share and earn economic rents; perhaps surprisingly, the usual coordination problems associated with getting a new market design off the ground are not central. However, imitation by other exchanges would result in an equilibrium that resembles the status quo with competitive trading fees, but now without the rents from the speed race. We conclude that although the social returns to adoption are large, the private returns are much smaller for an entrant exchange and negative for an incumbent that currently derives rents from the inefficiencies that the new design eliminates. Nevertheless, our analysis does not imply that a market-wide market design mandate is necessary. Rather, it points to a more circumscribed policy response that would tip the balance of incentives and encourage the “market to fix the market.”

Tuesday, September 18, 2018

A school choice mechanism from Taiwan, by Dur, Pathak, Song and Sönmez

Deduction Dilemmas: The Taiwan Assignment Mechanism

Umut M. Dur, Parag A. Pathak, Fei Song, Tayfun Sönmez

Issued in September 2018

NBER Program(s):Economics of Education, Labor Studies

"Loosely speaking, in Taiwan’s deduction system, a students priority is reduced based on the order in which preferences are ranked. Table 1 lists the schedule of points employed in 2014 across the largest districts in Taiwan. For instance, in Jibei, the largest district with over 60,000 applicants, a student’s score at her second choice is reduced by 1, the score at her third choice is reduced by 2, and so on. In Yunlin district, no points are deducted for the first four choices, and 2 points are 3 deducted from choices five through eight. The deduction system has now been in place for the last five years, from 2014-2018."

This system of deductions is used to determine each student's priority at each school, and allocations are then determined, by a deferred acceptance algorithm, based on student preferences and these school priorities.

Monday, February 19, 2018

Making algorithms fair

My attention was drawn to this news story about ongoing work by U. Penn computer scientists: Combatting ‘Fairness Gerrymandering’ with Socially Conscious Algorithms

|

Preventing Fairness Gerrymandering: Auditing and Learning for Subgroup Fairness

The most prevalent notions of fairness in machine learning are statistical definitions: they fix a small collection of pre-defined groups, and then ask for parity of some statistic of the classifier across these groups. Constraints of this form are susceptible to intentional or inadvertent "fairness gerrymandering", in which a classifier appears to be fair on each individual group, but badly violates the fairness constraint on one or more structured subgroups defined over the protected attributes. We propose instead to demand statistical notions of fairness across exponentially (or infinitely) many subgroups, defined by a structured class of functions over the protected attributes. This interpolates between statistical definitions of fairness and recently proposed individual notions of fairness, but raises several computational challenges. It is no longer clear how to audit a fixed classifier to see if it satisfies such a strong definition of fairness. We prove that the computational problem of auditing subgroup fairness for both equality of false positive rates and statistical parity is equivalent to the problem of weak agnostic learning, which means it is computationally hard in the worst case, even for simple structured subclasses.

We then derive two algorithms that provably converge to the best fair classifier, given access to oracles which can solve the agnostic learning problem. The algorithms are based on a formulation of subgroup fairness as a two-player zero-sum game between a Learner and an Auditor. Our first algorithm provably converges in a polynomial number of steps. Our second algorithm enjoys only provably asymptotic convergence, but has the merit of simplicity and faster per-step computation. We implement the simpler algorithm using linear regression as a heuristic oracle, and show that we can effectively both audit and learn fair classifiers on real datasets.

Friday, November 17, 2017

"Open algorithms" law proposed in New York City

Automated processing of data for the purposes of targeting services, penalties, or policing to persons.

Title: A Local Law to amend the administrative code of the city of New York, in relation to automated processing of data for the purposes of targeting services, penalties, or policing to persons

| Summary: | This bill would require agencies that use algorithms or other automated processing methods that target services, impose penalties, or police persons to publish the source code used for such processing. It would also require agencies to accept user-submitted data sets that can be processed by the agencies’ algorithms and provide the outputs to the user. |

Monday, November 28, 2016

Fairness for Digital Infrastructure conference, January 19-20, 2017, at Penn

"A large part of our digital infrastructure is designed to automate decision making, and ideally should improve economic efficiency. Automated decisions now govern, among many other things, whether we are approved for credit cards, what advertisements we are shown, and what search results we see. Algorithms determine the government’s perception of an individual’s risk (e.g. at airport security) or trustworthiness (e.g. in evaluating recidivism risk for parole decisions). The aim of the workshop is to better understand issues surrounding “unfairness” associated with the use of machine learning and automated decision making. This includes the frictions that are the cause of such inadvertent unfairness, and novel technical solutions to solve these problems.

This workshop will take place at the University of Pennsylvania in Philadelphia on January 19th and 20th 2017.

Co-organizers: Sampath Kannan, Jamie Morgenstern, Mallesh Pai, Aaron Roth, Rakesh Vohra"

Sunday, November 27, 2016

An interview with computer scientist Cynthia Dwork

How to Force Our Machines to Play Fair

The computer scientist Cynthia Dwork takes abstract concepts like privacy and fairness and adapts them into machine code for the algorithmic age.

Here are some earlier news stories about Apple's introduction of differential privacy to the iPhone, which I've been following for a number of reasons.

From TechCrunch: What Apple’s differential privacy means for your data and the future of machine learning

From Wired: Apple’s ‘Differential Privacy’ Is About Collecting Your Data—But Not Your Data

Apple's differential privacy analyzes the group, protects the individual

Posted on June 21, 2016

Monday, November 7, 2016

Sociology of high frequency trading

Article

Pages: 166-200

Published online: 26 Sep 2016

|

Article

|

Article

|

Article

|

Article

|

*************

A related working paper I enjoyed reading, on Donald MacKenzie's website:

How Algorithms Interact: Goffman’s ‘Interaction Order’ in Automated Trading

Donald MacKenzie

April 2016

Tuesday, September 20, 2016

Stable matching for university admissions in Vietnam?

Kỳ thi THPT Quốc Gia 2016

Về việc áp dụng thuật toán DAA của Gale-Shapley trong xét tuyển – Đối Thoại Giáo Dục

Google translate renders the headline this way:

"National high school exams in 2016

On application of the algorithm of Gale-Shapley DAA in admissions - Dialogue Education"